How to Web Scrape Fantasy Football Data

In this part of the intermediate series, learn how to automate scraping data from ProFootballReference to get seasonal and weekly Fantasy data.

In this part of the intermediate series, learn how to automate scraping data from ProFootballReference to get seasonal and weekly Fantasy data.

If you have any questions about the code here, feel free to reach out to me on Twitter or on Reddit.

If you like Fantasy Football and have an interest in learning how to code, check out our Ultimate Guide on Learning Python with Fantasy Football Online Course. Here is a link to purchase for 15% off. The course includes 15 chapters of material, 14 hours of video, hundreds of data sets, lifetime updates, and a Slack channel invite to join the Fantasy Football with Python community.

Welcome to part four of the series on Python for Fantasy Football Analysis! In this part of the series, we are going to begin a string of posts on how to actually get fantasy data. And not just get fantasy data, but how to automatically scrape fantasy data from the web.

We’re going to change it up a bit for the next couple parts. We’ll be writing a whole script this time instead of operating from our notebook environments (whether you were using colab or jupyter), so you’ll want to install a text editor if you don’t already have one.

I suggest downloading something lightweight such as Sublime or Atom. I personally use VSCode (and that’s what you see above in the video), but that’s geared more towards web development. Any of the three I mentioned above are sufficient.

Do note that this series is meant for people somewhat experienced with Python, or at the very least for someone who has some experience with another language. If you’re completely new to Python and want to follow along, that’s cool – but do know that I can’t explain everything for the sake of brevity. I’m writing a book on learning Python from scratch and am shooting for the beginning of March as the release date. (edit on March 31st, 2020: Oops, sorry guys. Life got kinda busy. Let's say beginning of May now)

Also, if you’re new to the series, you can probably follow along for this part if you’re just interested in learning how to web scrape. However, if you’re completely new to the library pandas I would recommend starting at part one, linked here.

With all the disclaimers out of the way, let’s get to coding!

Fire up your local terminal or command prompt, change directories to your project directory (wherever you were keeping your jupyter notebook files), and run the following command.

This command creates what’s called a “virtual environment”, which we call venv. For those unfamiliar with virtual environments, GeeksForGeeks.org could not have explained it better.

A virtual environment is a tool that helps to keep dependencies required by different projects separate by creating isolated python virtual environments for them.

This venv part is optional, however it is good practice. To activate the virtual environment on Mac or Linux, use the following command.

To activate the virtual environment on Windows, run the following command.

If you got a little (venv) to show up right before your project directory path on your terminal, you successfully activated the virtual environment.

Awesome! Now let’s download some dependencies. Run the following command in your terminal.

Wait for that to download, and then create a new .py file in your project directory. I named it webscraping.py, but you can name it whatever you like. And that’s it! Now, this entire post will be referencing that link to the Python file at the top of the post. Here it is one more time.

That was a lot of setup but now we can begin coding.

This is the first couple lines of our script. Just some basic import statements, however, I want to take this opportunity to explain the process by which we are scraping our fantasy data –

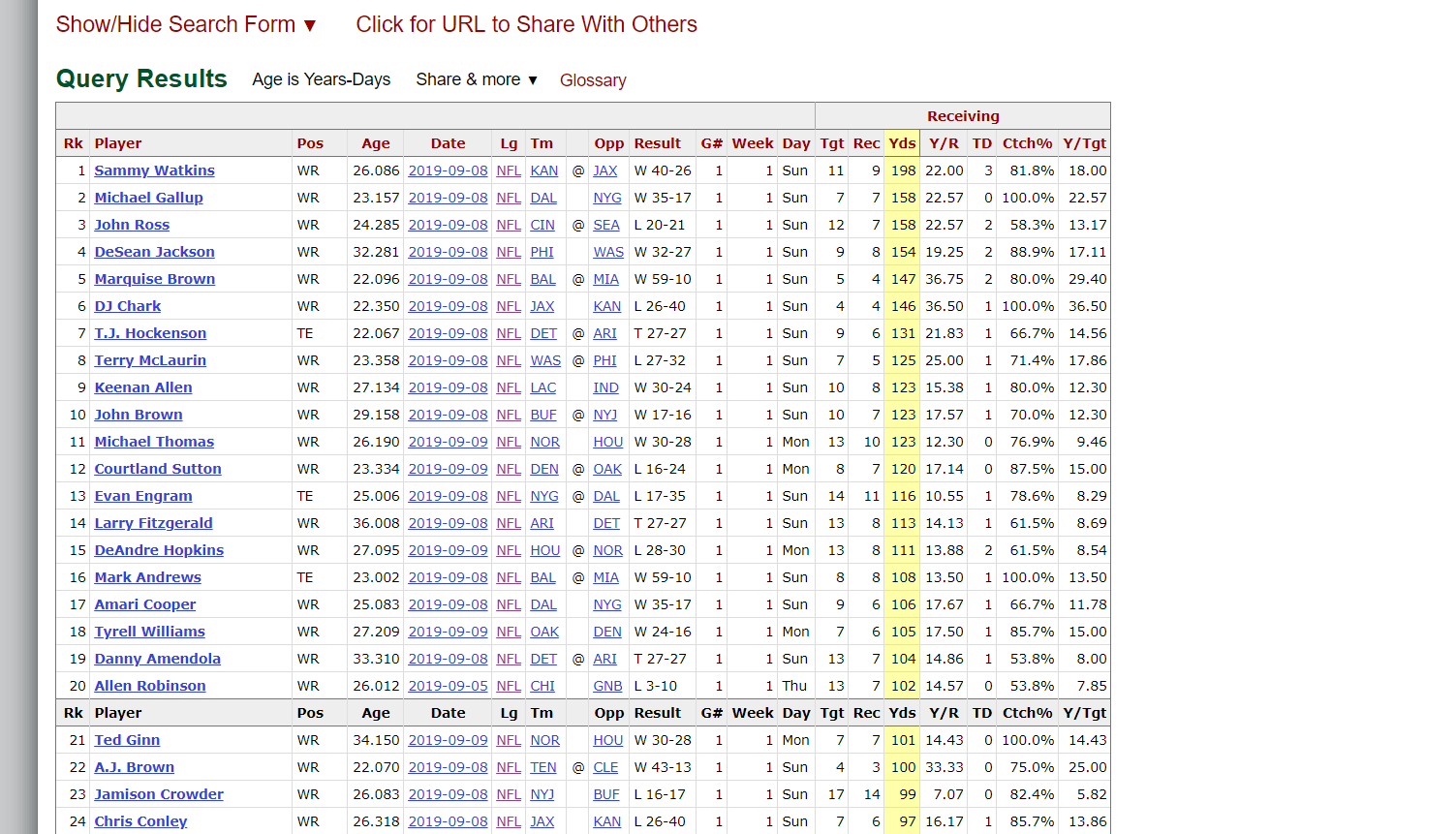

Above is an example of a table we’ll be parsing. Throwback to when Sammy Watkins scored like 50 points in week one! And then did nothing rest of season! Note: If you draft Sammy Watkins next season after reading my book and posts, I’m not liable for damages.

That was a lot of explaining for some import statements – but hopefully that makes the actual coding process easier to get through.

I mentioned above that we’ll be using profootballreference.com to scrape the data. I have to admit, it wasn’t the “best” choice, but it was the best out there for allowing me teach you guys some new concepts. The good news is that profootballreference provides weekly stats for TE, WR, RB, and QB going all the way back to 1999. Awesome! The bad news is that they separate each week into separate a web page based on rushing stats, receiving stats and passing stats. Yikes. So you’ll see that for any given week, we’ll have to make 3 HTTP GET requests each for rushing, receiving, and passing stats, create a DataFrame for each (using the process I outlined above), and then join the DataFrames together.

The whole process is indeed messy – that’s for sure. I chose to use PFR regardless, though, because (A), their HTML code is really simple and I didn’t want to waste too much time on the parsing process. And (B), it will allow me a chance to introduce you all to joining tables, which can be quite complicated but also essential.

For those unfamiliar with input, it’s a built in function that will momentarily stop a script from continuing and accept inputs from a user. We convert year and week to integers using the built-in python function int(), because input gives us back strings as a default.

Before we move on to our next block of code, let’s inspect the url pattern for an example week and year. Let’s say season 2019, week 1.

I’ve linked them below (Don’t bother opening them if you’re on mobile though, the url pattern is quite long).

If you’re on a desktop though, take a look at the url pattern at the top of your browser. Try to figure out a pattern or some key that can help us figure out how to find any given week’s web page by simply looking at the URL.

Okay, here it is. If you haven’t done web development before, you can pass in variables to a web page and subsequently to a backend server using “url parameters”. They look like this typically –

https://website.com/home?param=value

These parameters tell the page what to display. When you change these parameters, you typically change the page. Profootballreference uses season number and week number as parameters to show you, well, different weeks and different seasons. Here’s the URL for passing stats for 2019 for week 1.

It can be hard to tell at first, but they use the parameters, year_min and year_max to display a given year. We obviously want year_min and year_max to be equal to give us one year. They also use week_num_min and week_num_max to display a given week. Same thing here, we want these numbers to be equal, so as to get one week of data.

Here’s the code for dynamically building the URLs we are going to request from. This is the block of code included following the user inputs.

Really messy, but you can see we’re formatting these three strings with the inputs we got from the user in the command line to create three separate URLs to request from.

Here we create a dictionary, and later we’ll use the .items() dictionary method to iterate through and run the request, parse, and transform to DataFrame process I described for each of the three URLs.

Next, we create an empty dfs list that’ll hold our rushing DataFrame, receiving DataFrame, and passing DataFrame.

The last block of code is simply a dictionary of keyword arguments we’ll be passing to pandas multiple times. defColumnSettings is shorthand for default column settings. If you’re not super familiar with Python, you can pass in keyword arguments to a function with a dictionary using a special notation (double asterisks). In short, these two lines of code below are exactly equivalent. (This is not actually part of our source code, by the way)

Since we’ll be changing a lot of columns, we’ll be setting axis = 1 and inplace = True a lot, so I simply saved the keyword arguments to a dictionary, so we can pass them in using this shorthand notation. By the way, this is called “unpacking”, and it’s especially useful when you have a long list of arguments to pass to a function.

Yeah, that’s a lot of code, but don’t worry – It’s actually not that complicated. We’ve already gone over almost all the theory. Let’s break this code down line by line.

Remember when we created a dictionary with key, value pairs of key, url? Now we are iterating through them using a description of the url as a key, and then the actual url itself as the value. For those that don't know, Python dictionaries have a built-in method called .items() that allows you to iterate through a dictionary. Really useful.

We use requests here to make a HTTP GET request to our url and save the response to a variable we call response.

Here, we create a BeautifulSoup object and pass in response.content as an argument, along with ‘html.parser’. A HTTP response comes back with a bunch of stuff, but the two big ones are the status code and content (You might have seen a 404 status code before – the status code that indicates that a requested resource was not found). We use the content attribute to access, well, the content part of our response – which is actually our HTML. We also pass in ‘html.parser’ as our second argument. Don’t want to get too bogged down in the details, so I’ll just say that this argument tells BeautifulSoup to parse this as an HTML file. That’s it really.

Now, we use the built-in BeautifulSoup method called find to find the table we need by searching for a table element in the HTML code. If you’re unfamiliar with HTML, HTML code is made up of “elements” that make up an entire page. One such element is a <table> element that is used to make tables to store and display data like a pandas DataFrame. It just so happens that the data we need is located in a table element. Awesome! Moreover, some elements have id’s. Each id on a page should be unique, and this makes id’s incredibly useful for scraping as we can quickly find specific elements we need by searching by their unique id. Great news – our table has an id 🙂 We pass in {‘id’: ‘results’} to tell BeautifulSoup our table’s id. Note: Scraping isn’t always this easy. Sometimes you need to engineer some really “creative” code to be able to scrape a page. Again, the simplicity of PFR’s HTML code was the primary reason I chose it as our data source.

Above is a brief example of how I go through the process of scraping. Before scraping, you have to inspect the HTML code and find unique and identifiable characteristics to be able to scrape the page. You can see I found the table element and that it had an id=results value. You can inspect the page by right clicking and clicking inspect, or by opening up developer tools on your browser – each browser is different so this is something you’ll have to google yourself.

Pandas actually has this really neat function called read_html that can read straight from an HTML table and convert it to a DataFrame. We convert it to a string because that’s what pandas wants. Per the pandas documentation, read_html returns a list of DataFrame objects. Our list is a length of one – since we only have one table, and so we access our table by using [0]. And voila – we have our DataFrame. All we need to do now is clean it up.

If you examine any of our source tables from PFR’s website, you’ll notice we have what’s called a multi-column index – or something like that. In non-complicated terms, we have two rows where our column names go. We only need that bottom row to make life easy for us. Our column index has two levels right now, a top row and bottom row. We use df.columns = df.columns.droplevel(level=0) to drop the top row for each DataFrame. The top row is indexed as 0, much like a list’s first element is indexed as 0.

Dropping a bunch of columns. Using that unpacking notation to pass in axis=1 and inplace=True.

Examine one of our source tables from PFR’s site again and you’ll notice we have some rows where PFR repeats the column name values half way down the table to make it easier for humans to read the table. That’s really nice and generous of PFR, but of no use to us here. This line is filtering out that row. My decision to filter out based off the Pos column was arbitrary – you could have easily used any other column name.

Setting our index to three column values – this will be especially useful when we join the three DataFrames together. Setting inplace=True to save our changes.

This block is long, but pretty simple. We are using our key to alter our DataFrames based off what type of DataFrame it is – obviously, we want to make separate changes to our rushing DataFrame and separate changes to our passing DataFrame. I’m going to gloss over this part as we’ve covered all these concepts in parts one through three. At the end, we append our dfs list with our new DataFrame, and our for loop is done!

This is our next block of code. Looks simple, but that first line of code is quite complicated.

There’s a problem with our three DataFrames – we have duplicates across DataFrames. Lamar Jackson can throw and run the ball, so he shows up on both our rushing DataFrame and passing DataFrame. We want a way to combine these DataFrames while not having two separate rows for players like Lamar Jackson.

What we need to do is outer join these three tables. Now, I’ve decided the next part of this series is going to be on joining, merging, and concatenating as a discussion about these topics necessitates an entire post of it’s own. I’ll be covering these concepts in even more depth in my book. Here’s a great article that touches on all of these concepts. For now, I’m just going to tell you we outer join all three DataFrames – that’s all you need to know for now before this post turns into a novel.

This line fills all of our NA cells – those cells where a player has no recorded rushing, passing, or receiving stats to 0. Pandas gives us a neat function to easily do this called fillna(REPLACEMENT), and allows us to pass in a replacement value as an argument.

This line is to convert all values in our DataFrame to an integer. Pandas has another useful function called astype to be able to convert an entire DataFrame, column, or row to a specified data-type. astype has no inplace argument so we use the syntax df = df.some_transforming_function() to make a permanent change to our DataFrame.

We create a column for FantasyPoints and then reset our index before saving our DataFrame. You guys should be good on this. We’ve done this twice already in previous posts.

And finally we save our DataFrame! Remember when I was blabbering on about argv? We use it here to check if we pass in –save as a command line argument, and if so, save our DataFrame as a CSV using pandas’ to_csv function! I created a new directory for my datasets to keep stuff organized, but you can skip this step. We format our filename with the week and year to be able to differentiate our different datasets.

And lastly, we wrap all this in a try, except block in case argv[1] brings back an IndexError (This would happen when the user does not pass in –save as an argument).

To run the code, run the following command, and answer the user prompts and you’re good to go!

Congrats! You can now scrape Fantasy Football Data all the way back to 1999! Side-note: You’ll notice our model does not have fumbles 🙁 This isn’t that big a deal though as fumbles are only -2 fantasy points, but keep that in mind. I’ll try to come up with a solution by next part.

Some activities for you before we end this post

Thanks for reading! You guys are awesome!